What is Edge Computer Vision and how to get started?

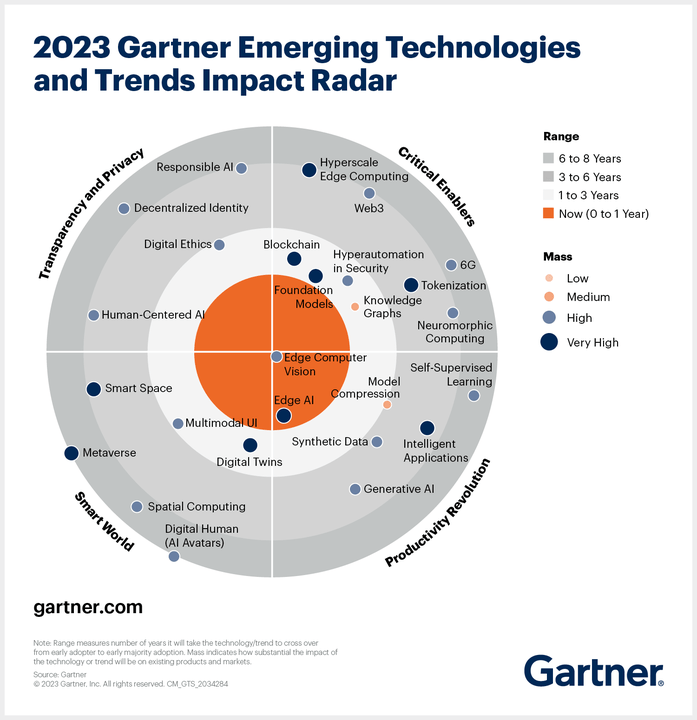

Edge computer vision has emerged as a transformative technology, with Gartner recognizing it as one of the top emerging technologies of 2023. Within the realm of edge computing, edge computer vision stands out as a high-impact application that should start transitioning from early adopters to a broader market. However, edge computer vision remains challenging: cutting-edge computer vision models usually require substantial computing resources, and edge devices offer only limited ones.

Understanding Edge Computer Vision.

Before diving into the specifics of edge computer vision, it's important to understand its foundations. Computer vision is the process of enabling computers to analyze and interpret visual data, similar to how humans perceive images or videos. It has a wide range of applications, including automatic inspection, object recognition, surveillance, and autonomous vehicles.

Edge computing, on the other hand, involves processing data at or near the source, rather than relying on centralized cloud-based servers. By bringing computation closer to the data, edge computing offers advantages such as reduced latency, improved response times, enhanced security, and lower bandwidth requirements.

When we combine computer vision with edge computing, we get edge computer vision, a powerful combination that enables real-time analysis and decision-making at the edge devices themselves. This means that visual data can be processed and analyzed locally, without the need for constant communication with remote servers. Edge computer vision opens up new possibilities for industries by providing faster insights, improved privacy, and the ability to operate in resource-constrained environments.

Unlocking Vision on the Edge.

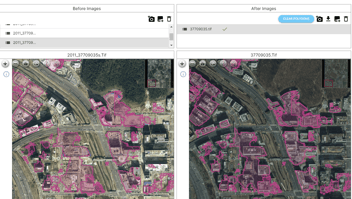

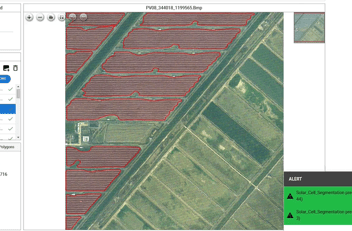

Deep Block's Machine Learning Operations (MLOps) platform simplifies the training and deployment of computer vision models, empowering businesses to harness the power of remote sensing imagery analysis, among many other applications. MLOps is the practice of automating machine learning workflows, from data preparation to deployment. With these workflows automated, analysts can streamline the identification of patterns, trends, and anomalies in the collected data, reaching a level of accuracy and insight that was previously hard to achieve.

Deep Block typically deploys computer vision models in the traditional way, with inference performed in the cloud. Because of growing demand for real-time or near-real-time analysis, the company has started exploring ways to deploy these models directly on the edge. Computer vision models built with deep learning techniques have traditionally demanded significant computational resources, which makes them hard to deploy on edge devices with limited capabilities. With the advent of new computer vision technologies, however, these models can be optimized and compressed to meet the computational constraints of edge devices.

Deep Block's technology addresses this challenge by allowing the training and deployment of efficient and compact computer vision models (small size, low frame rate). Deploying computer vision models on the edge requires striking a balance between model complexity, accuracy, and computational efficiency. Deep Block enables the creation of lightweight models that can perform inference tasks effectively on edge devices. These models are designed to extract relevant features from visual data while minimizing the computational burden.
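
To make the idea of model compression more concrete, here is a minimal sketch of one widely used technique, post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats. The article does not say which optimization methods Deep Block uses, so this is an illustration only; the function names `quantize_int8` and `dequantize` and the sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization of a list of float weights."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would work.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 values back to approximate float weights."""
    return [q * scale for q in quantized]


# Hypothetical weights from a single layer, for illustration only.
weights = [0.82, -1.27, 0.05, 0.0, 0.63]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error. This is the kind of trade-off between model size and accuracy described above; production toolchains apply the same idea with more care, for example by calibrating scales per channel.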

Compared to cloud-deployed models, edge computer vision models developed using Deep Block offer several advantages. Firstly, they can operate in real-time or near-real-time, providing instant insights and enabling immediate actions. Secondly, they offer enhanced privacy and security, as sensitive data is processed locally without being transmitted to remote servers. Lastly, edge deployment reduces dependency on network connectivity, making it suitable for applications in remote areas or environments with limited internet access.

Empowering Defense: The Role of Edge Computer Vision in Drone Warfare.

The recent conflict between Russia and Ukraine has highlighted the significant role of Unmanned Aerial Vehicles (UAVs), commonly known as drones, in modern warfare. This large-scale war between two major nations has witnessed the utilization of advanced weaponry, including drones and sophisticated software systems. Numerous reports have shed light on the key aspects of this conflict, emphasizing the following points:

- The value of cost-effective multicopter drones: The abundance of affordable multicopter drones has proven advantageous in both reconnaissance and combat operations. These drones offer a cost-effective solution, enabling warfighters to deploy a large number of expendable drones rather than relying solely on expensive, larger drones. Cost efficiency is a crucial factor in warfare, and small UAVs that can be acquired at a low cost and deployed in large quantities have become highly effective military assets.

- Evolving capabilities of small UAVs: Small multicopter drones have changed the landscape of warfare, evolving from simple reconnaissance tools into highly capable devices that can carry weapons, drop grenades, and integrate various sensors. The ongoing conflict between Russia and Ukraine has demonstrated the effectiveness of small unmanned aerial vehicles in neutralizing air defense networks, disrupting enemy radar detection, and functioning as potent reconnaissance and attack instruments. Both countries have employed DJI drones from China, highlighting the prominence of small UAVs in modern warfare.

The integration of Edge Computer Vision technology plays a crucial role in enhancing the capabilities of drones in warfare. This technology addresses challenges related to electronic warfare, antennas, and supply logistics that arise during conflicts. In times of war, supply routes and trade channels are severely restricted, making it challenging to procure high-tech equipment and maintain robust communication systems. The deployment of small UAVs connected to a centralized control center, coupled with artificial intelligence technology, can be hindered by electronic warfare equipment.

The electromagnetic waves emitted when small UAVs communicate with command centers can be detected by enemy forces, potentially revealing the drone's location. Furthermore, wireless communication infrastructure is often damaged or limited during warfare. Drones are already being weaponized in the Russian-Ukrainian conflict, and they are currently piloted by operators on the battlefield. However, future developments point toward automated piloting and intelligent drones.

Integrating drones with centralized control centers is difficult because of these issues. Consequently, technological advancements will lead to drones that can independently recognize situations and conduct combat operations without constant communication with a central control center. All information processing will occur onboard the drone, which requires building computer vision technology into the drones themselves.

Computer vision technology enables drones to perceive and interpret visual data from their surroundings. By equipping drones with computer vision capabilities, they can identify objects, detect threats, navigate through complex environments, and make informed decisions in real-time. This enhanced autonomy empowers drones to operate effectively even in scenarios where communication with a central control center is compromised or limited.

The application of computer vision in defense and drone warfare opens up a wide range of possibilities. Drones equipped with computer vision can perform autonomous reconnaissance missions, identify and track targets, assess battlefield situations, and provide valuable intelligence to military personnel. They can also assist in target acquisition, precise weapon delivery, and post-strike damage assessment.

Looking ahead, the integration of computer vision with edge computing technology offers even greater potential for drones in defense applications. Edge computing allows the processing of visual data directly on the drone or in its immediate vicinity, reducing reliance on cloud-based servers and minimizing latency. This capability is particularly beneficial in scenarios where real-time decision-making is critical, such as in surveillance, target tracking, and threat detection.

The use of edge computer vision in drones for defense purposes paves the way for advanced capabilities and operational efficiency on the battlefield. As technology continues to evolve, we can expect further advancements in the autonomy, intelligence, and performance of drones, enabling them to play an increasingly vital role in modern warfare.

The recent conflict between Russia and Ukraine has underscored the significance of drones in contemporary warfare. The integration of Edge Computer Vision technology enhances the capabilities of drones, allowing them to operate autonomously, make informed decisions, and effectively fulfill various defense roles. With the ongoing advancements in computer vision and edge computing, the future of drones in defense and warfare appears promising, presenting opportunities for increased efficiency, enhanced situational awareness, and improved operational outcomes on the battlefield.

How to choose the best edge deployment method?

Edge computer vision can be deployed in various forms, depending on the specific requirements of the application:

- On-Device Deployment: In this form of deployment, the computer vision model is directly installed and executed on edge devices such as cameras, smartphones, or embedded systems. On-device deployment offers real-time processing capabilities, enabling applications like facial recognition for access control, mobile augmented reality, and real-time object detection for autonomous robots.

- Edge Server Deployment: In edge server deployment, a dedicated server is placed at the edge of the network, closer to the data sources. The server performs the computationally intensive tasks of processing and analyzing visual data, while the edge devices primarily handle data collection and transmission. This deployment is suitable for scenarios like smart cities, where multiple edge devices generate vast amounts of data that need to be processed efficiently for tasks such as traffic monitoring, crowd analysis, and environmental monitoring.

- Hybrid Cloud-Edge Deployment: In a hybrid deployment, a combination of cloud-based servers and edge devices work together to process visual data. Edge devices perform initial processing and filtering, while more complex analysis and storage are offloaded to the cloud. This deployment offers a balance between edge processing capabilities and the scalability and storage capacity of the cloud. It is commonly used in applications like video surveillance systems, where real-time detection happens at the edge, while cloud servers provide long-term storage and post-event analysis.
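
The trade-offs between these deployment forms can be sketched as a small decision helper. This is a simplified illustration rather than a definitive rule: the `Requirements` fields, the `choose_deployment` function, and the `CLOUD_ROUND_TRIP_MS` value are all assumptions made for the example, and a real project would weigh more factors, such as cost, power, and data regulations.

```python
from dataclasses import dataclass

# Assumed round-trip time to a remote cloud server, for illustration only.
CLOUD_ROUND_TRIP_MS = 200


@dataclass
class Requirements:
    max_latency_ms: float          # response time the application can tolerate
    device_can_run_model: bool     # the model fits the device's compute and memory
    has_local_server: bool         # an edge server is available near the data sources
    needs_long_term_storage: bool  # results must be archived or analyzed after the event


def choose_deployment(req: Requirements) -> str:
    """Pick a deployment form from the hypothetical requirements above."""
    edge_available = req.device_can_run_model or req.has_local_server
    if not edge_available:
        # Without any edge capacity, only cloud inference remains.
        if req.max_latency_ms < CLOUD_ROUND_TRIP_MS:
            raise ValueError("no deployment meets the latency requirement")
        return "cloud"
    if req.needs_long_term_storage:
        # Real-time detection at the edge, storage and post-event analysis in the cloud.
        return "hybrid cloud-edge"
    if req.device_can_run_model:
        return "on-device"
    return "edge server"


print(choose_deployment(Requirements(50, True, False, False)))
print(choose_deployment(Requirements(50, False, True, False)))
print(choose_deployment(Requirements(50, True, True, True)))
```

The order of the checks encodes one possible preference: use the cloud only when nothing at the edge can run the model, go hybrid whenever long-term storage is needed, and otherwise keep inference as close to the sensor as the hardware allows.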

What are the benefits of Edge Computer Vision?

Deploying computer vision models on the edge brings several benefits and opens up a wide range of potential use cases:

- Real-Time Decision-Making: Edge computer vision enables instant decision-making by processing visual data at the source. This is crucial in time-sensitive applications such as infrastructure inspection, where drone imagery can be analyzed on the fly so that problems in critical environments can be addressed immediately.

- Enhanced Privacy and Security: By processing data locally, edge computer vision ensures that sensitive information remains within the edge devices, reducing the risk of data breaches during transmission. This makes it suitable for applications where privacy and security are paramount, such as surveillance systems and healthcare.

- Reduced Bandwidth Requirements: Edge deployment minimizes the need for transmitting large volumes of data to remote servers, resulting in reduced bandwidth requirements. This is advantageous in scenarios with limited network connectivity or high costs associated with data transfer, such as remote industrial sites or rural areas.

- Resource-Constrained Environments: Edge computer vision is particularly beneficial in resource-constrained environments where access to powerful cloud servers may be limited. By leveraging the computational capabilities of edge devices, industries like agriculture, oil and gas, and manufacturing can analyze visual data locally and make real-time decisions without relying on constant internet connectivity.

- Industrial Automation and Quality Control: Edge computer vision finds extensive use in industrial automation, where it enables real-time monitoring and analysis of manufacturing processes. By detecting anomalies, defects, or quality issues on the assembly line, it helps improve efficiency, reduce downtime, and enhance overall product quality.
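
The bandwidth benefit listed above can be illustrated with some back-of-the-envelope arithmetic. The sketch below compares streaming raw video to a remote server with sending only detection results from the edge. All figures (resolution, frame rate, detections per frame, bytes per detection) are assumptions chosen for illustration; real video is normally compressed and would need far less than the raw figure, though typically still much more than the metadata.

```python
def raw_video_bps(width, height, fps, bits_per_pixel):
    """Bits per second needed to stream uncompressed video."""
    return width * height * fps * bits_per_pixel


def metadata_bps(detections_per_frame, bytes_per_detection, fps):
    """Bits per second needed to send only detection results."""
    return detections_per_frame * bytes_per_detection * 8 * fps


# Assumed camera: 1920x1080, 30 frames per second, 24 bits per pixel.
video = raw_video_bps(1920, 1080, 30, 24)

# Assumed payload: 10 detections per frame, 32 bytes each (class, score, box).
metadata = metadata_bps(10, 32, 30)

print(f"raw video: {video / 1_000_000:.1f} Mbps")
print(f"metadata:  {metadata / 1_000:.1f} kbps")
print(f"ratio:     {video // metadata}x")
```

Under these assumptions the raw stream needs about 1.5 Gbps while the detection metadata needs under 100 kbps, which is why processing at the source matters on links with limited capacity or high transfer costs.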

Edge computer vision, powered by technologies like Deep Block, offers tremendous potential for industries seeking real-time insights, enhanced privacy, and efficient processing of visual data. As the range of edge computing expands and mass adoption becomes a reality, businesses across various sectors can leverage edge computer vision for applications ranging from autonomous vehicles to smart cities, from healthcare to industrial automation. By embracing the power of edge computer vision, companies can unlock new possibilities and gain a competitive edge in today's data-driven world.